The Sweet Spot: How AI Augments (Not Replaces) Engineering Leadership

As engineering managers, we often spend too much time on repetitive tasks when we should be focusing on strategy, people, and growth. That time comes straight out of the hours we need for big-picture thinking and for the decisions that shape the team and the company.

For example, your calendar says “cycle review prep” - 60 minutes blocked. But your brain knows the truth: 50 minutes copying data from Linear and Swarmia, 10 minutes of actual thinking.

This isn’t about being lazy. It’s about clarity. What if automation handled the structure so your brain could focus on the story?

Structure vs. story

I learned something from home automation that applies to everything I do as an engineering manager: there’s a sweet spot with automation.

Automate too much and you undermine systems that were working reliably. Badly designed automation feels awkward and annoying. The technology should augment human capabilities, not replace them.

The same principle applies to engineering management.

Structure is what computers do well: logic, data, templates, patterns.

Story is what humans do well: emotion, judgment, context.

Computers fill in the logic. People add the meaning.

By judgment, I don’t mean simple decision-making - AI can do that. I mean the contextual, emotionally-aware understanding that comes from knowing your team, reading subtext, and applying experience across situations that don’t fit into patterns.

Get this balance wrong and you pay a price:

- Automate too little → you’re stuck in busy work with no mental space for thinking

- Automate too much → you break feedback loops, lose connection to the work, and your team stops seeing your leadership

The sweet spot is where you automate just enough to free your brain for what actually matters.

A concrete example: cycle review presentations

Every cycle, I run a team review meeting. We look at what we shipped, where we invested our time, what we learned, and what’s next.

Without automation, preparing for it took about an hour:

- Create a new presentation deck

- Copy cycle information from Linear

- Paste investment allocation from Swarmia

- Format everything so it looks consistent

- Add titles, dates, team names

By the time I finished the mechanical work, my brain was tired. The actual thinking - synthesizing what we achieved, identifying struggles, framing learnings - felt like a chore instead of leadership.

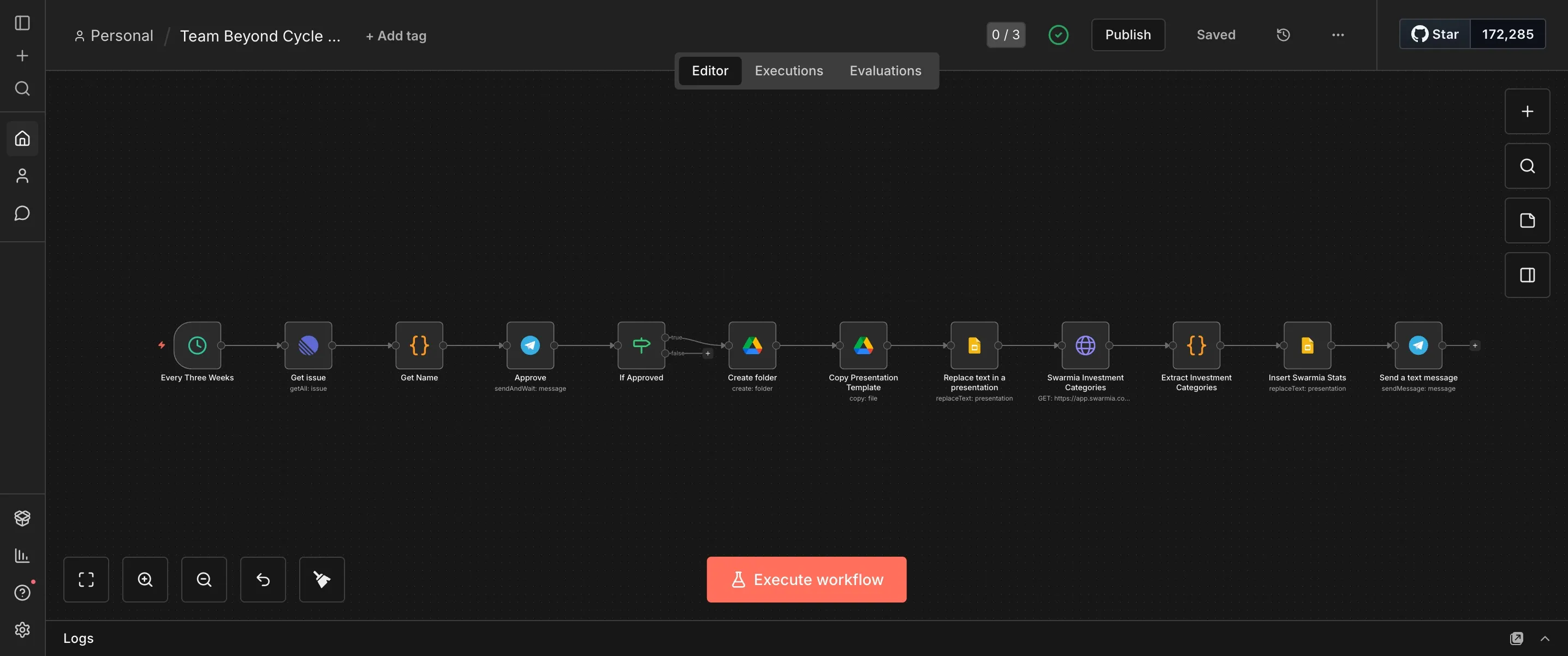

So I built an n8n workflow to take over the mechanical part.

On the day of the cycle review, I get a Telegram message: “Cycle review today - should I create the presentation?”

This human-in-the-loop step matters. Sometimes there’s no meeting (holidays, vacation, schedule changes). I want the reminder, but I want control.

When I confirm, the workflow:

- Pulls cycle data from Linear (tickets, status, metadata)

- Fetches investment categories from Swarmia (feature work vs. bugs vs. tech debt)

- Creates a new Google Slides deck from our template

- Fills in all the structure: cycle title, dates, ticket summaries, allocation charts

- Sends me a Telegram notification when it’s ready
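The steps above can be sketched in a few lines of Python. This is not the n8n workflow itself, only a minimal illustration of its shape under some assumptions: the function names (`run_cycle_review`, `allocation_shares`), the `Deck` class, and the field names in the cycle data are all hypothetical, and the Telegram, Linear, Swarmia, and Google Slides calls are passed in as plain callables rather than real API clients.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Deck:
    """Hypothetical in-memory stand-in for a deck created from a template."""
    title: str
    slides: dict[str, object] = field(default_factory=dict)


def allocation_shares(effort_by_category: dict[str, float]) -> dict[str, float]:
    """Convert raw effort per category into percentage shares."""
    total = sum(effort_by_category.values())
    if total == 0:
        return {name: 0.0 for name in effort_by_category}
    return {
        name: round(100 * effort / total, 1)
        for name, effort in effort_by_category.items()
    }


def run_cycle_review(
    confirm: Callable[[str], bool],                 # e.g. a Telegram yes/no prompt
    fetch_cycle: Callable[[], dict],                # e.g. a Linear lookup
    fetch_allocation: Callable[[], dict[str, float]],  # e.g. a Swarmia lookup
    notify: Callable[[str], None],                  # e.g. a Telegram message
) -> Optional[Deck]:
    # Human-in-the-loop gate: nothing is created unless the manager confirms.
    if not confirm("Cycle review today - should I create the presentation?"):
        return None

    cycle = fetch_cycle()
    deck = Deck(title=cycle["title"])

    # Structure: everything here is mechanical data movement.
    deck.slides["dates"] = f'{cycle["start"]} to {cycle["end"]}'
    deck.slides["tickets"] = [
        f'{t["id"]}: {t["title"]} ({t["status"]})' for t in cycle["tickets"]
    ]
    deck.slides["allocation"] = allocation_shares(fetch_allocation())

    # Story: these sections are deliberately left empty for the manager.
    for section in ("achievements", "struggles", "learnings"):
        deck.slides[section] = ""

    notify(f'Deck "{deck.title}" is ready')
    return deck
```

The design choice worth noting is that the story sections are created but never filled. The code prepares the space for the synthesis without attempting to write it.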

The automation handles about 80% of the work.

The 20% I still do manually is everything that requires human judgment:

- How much did we actually achieve? (Not just ticket count, but impact - and impact depends on context AI doesn’t have)

- What goals do people on the team have? (Career growth, skill development - shaped by 1:1 conversations, not data)

- Where did we struggle? (Technical challenges, process friction, team dynamics - understanding the “why” behind the patterns)

- What did we learn? (Not just post-mortems, but insights that connect this cycle to what comes next in ways only I can see)

This split matters.

If AI wrote the achievements and learnings, the review could be an email, not a meeting. The cycle review is a feedback loop. My synthesis is the leadership. The team needs to see that I’ve engaged with the work, not delegated it to an algorithm.

The results:

- I save about an hour of mechanical work every cycle

- More importantly: I have mental space to actually think instead of copying and pasting

- My team didn’t notice anything different

The automation is invisible because the part they see - my judgment, my synthesis, my leadership - is still human.

How to find your sweet spot

Not every repetitive task should be automated. Here’s how I think about where to draw the line.

What requires judgment vs. what is mechanical?

Can a script determine this, or does it need context, relationships, history?

If you need to understand subtext, read between the lines, or apply experience that doesn’t fit into patterns, that’s human work. AI might suggest an answer, but it can’t know that someone’s low output this cycle is actually healthy growth because they’re learning to mentor.

If you’re just moving data from one place to another, that’s mechanical work.

What feedback loops matter?

If you automate this, do you lose connection to something important? Does your team need to see you doing this work?

In the cycle review example, if I automated the “what we learned” section, I’d lose the forcing function to actually reflect on the cycle. I’d also signal to the team that I’m not paying attention.

The automation would break the feedback loop that helps us grow.

Where does your brain add value?

Are you copy-pasting, or are you synthesizing?

Could you do this task half-asleep, or does it require thought?

If you’re operating on autopilot, automate it. If you’re thinking deeply, keep it human.

Red flags you’ve automated too much

- You feel disconnected from the output

- Your team asks “did you even look at this?”

- You can’t answer questions about the data

- The automation produces generic insights anyone could have written

Green lights you’re at the sweet spot

- You’re ready to engage with the output instead of avoiding the work

- Time saved goes to actual thinking and strategy

- Quality stays the same or improves

- Your team doesn’t notice the automation because your judgment is still visible

Automation for clarity

This isn’t really about saving time. It’s about freeing your brain to think.

Repetitive work clutters mental space. Every time you copy-paste from Linear, every time you format a slide, every time you align text boxes - that’s cognitive load. It accumulates.

By the time you get to the work that actually requires thinking, you’re already tired.

Automation gives you clarity. You see patterns you’d otherwise miss. You ask better questions. You challenge assumptions instead of just documenting data.

Processes - automated or not - give your work structure so you don’t get overwhelmed. Automation builds on that: the boring things are handled, and you can use your brain for analysis, for finding patterns, for strategy.

Not for busy work.

Where this goes next

One automation working well beats ten half-finished workflows.

Performance reviews are next on my list. Same pattern: gathering feedback and compiling examples is structure work. Synthesizing themes across conflicting feedback, calibrating what “good” means for each person’s unique context, understanding how someone’s growth trajectory connects to their career aspirations - that’s the story only a manager can tell.

The framework applies: automate the structure, preserve the story.

The bigger opportunity is helping others in the company find their own sweet spot. Not everyone needs to build n8n workflows, but everyone can benefit from asking: which parts of my work are structure, and which parts are story? What should I automate, and what must stay human?

When more people separate mechanical work from judgment work, the whole organization gets clearer about where human attention actually matters.

Closing

If you want to try this yourself, start with one thing. Pick a report or presentation you do regularly - something that makes you think “not this again” when it shows up on your calendar.

Map it out. What parts are just moving data around? That’s structure. What parts require you to understand context, relationships, or subtext? That’s story. Be honest about where your judgment actually adds value.

Then automate just the structure part.
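The mapping exercise can be as simple as a list of steps tagged by whether they need judgment. The sketch below is one way to do it; the `Step` class, the helper names, and the per-step minutes are illustrative assumptions (the minutes are invented to match a 50/10 split between mechanical work and thinking, not measured).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    minutes: int
    needs_judgment: bool  # True = story, False = structure


def split_structure_story(steps: list[Step]) -> tuple[list[Step], list[Step]]:
    """Separate mechanical steps (structure) from judgment steps (story)."""
    structure = [s for s in steps if not s.needs_judgment]
    story = [s for s in steps if s.needs_judgment]
    return structure, story


def automatable_share(steps: list[Step]) -> float:
    """Fraction of total time spent on steps that need no judgment."""
    total = sum(s.minutes for s in steps)
    mechanical = sum(s.minutes for s in steps if not s.needs_judgment)
    return mechanical / total if total else 0.0


# Illustrative numbers only: a one-hour cycle review prep.
CYCLE_REVIEW_STEPS = [
    Step("Create a new presentation deck", 5, False),
    Step("Copy cycle information from Linear", 20, False),
    Step("Paste investment allocation from Swarmia", 10, False),
    Step("Format everything consistently", 10, False),
    Step("Add titles, dates, team names", 5, False),
    Step("Synthesize achievements, struggles, learnings", 10, True),
]

structure, story = split_structure_story(CYCLE_REVIEW_STEPS)
print(f"Automate: {[s.name for s in structure]}")
print(f"Keep human: {[s.name for s in story]}")
print(f"Automatable share: {automatable_share(CYCLE_REVIEW_STEPS):.0%}")
```

The hard part is not the script but setting `needs_judgment` honestly for each step.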

For tools, n8n works well for workflow automation if you like visual interfaces. Most project management tools (Linear, Jira, GitHub, Swarmia) have APIs you can tap into. If you need help with synthesis, Claude or ChatGPT can be useful - but be careful here. This is exactly where over-automation breaks feedback loops.

The goal isn’t efficiency. It’s clarity. You want mental space to think, not just time saved.

If the automation feels awkward or disconnects you from your work, you’ve probably automated too much. Your team should still see your judgment in the output. The automation should be invisible to them.

What repetitive work is keeping you from thinking clearly?

About the author: Mirko Borivojevic is Team Lead & Senior Software Engineer at Hygraph, with 15+ years of experience across startups, biotech, and SaaS companies. He writes about engineering leadership at Behind the notebook.